As an early political system, democracy has its roots in ancient Greece, but over the millennia, it has continually developed alongside society. Considering this timescale, the technologies of artificial intelligence (AI) and machine learning, as well as the digital world – their natural environment – are very recent phenomena. Nevertheless, at times it seems like they are overtaking politics in the fast lane. While at least some leading politicians were still busy exploring the internet as “new territory” in 2013, breakthroughs in deep learning had already made it one of the most powerful tools available for dealing with digital data. Today, anyone who understands these tools and can apply them on a large scale gains power in the digital world.

We recently invited future experts in both areas – PhD students in machine learning and their colleagues in politics and social sciences – to a discussion in which we asked them: How can we oversee a mutual process in which AI develops within a democracy?

Touchpoints between AI and democracy

Touchpoints between AI and democracy arise all the time, for example whenever political decision-making is influenced by an algorithm. This happens, for instance, when users of social networks are presented during an election with personalized adverts designed to influence – or manipulate – their decision about who to vote for. Democracy and algorithms also interact when politicians base their political decisions on modelling and projections of future developments. Modelling is used in science to predict future developments under changing conditions. The accuracy of such predictive models is enhanced by machine learning, and in the future, these kinds of models will be used more and more in everyday politics, for example, to predict the development of a pandemic or the effects of interventions to combat climate change.

Some consider democracy to be fundamentally at risk from the dominance of the attention economy, in which the limited potential of human attention falls victim to the economic interest of companies. James Williams, who works on ethics in technology, argues that the continual bombardment of flashing adverts, entertainment options, and notifications on our smartphones robs us of our ability to concentrate on what is important, to set collective goals, and to make decisions that benefit society. This, again, is possible only with the help of machine learning, sophisticated algorithms, and powerful models that can optimize the user interfaces of social networks, apps, and streaming services toward maximum engagement (the interaction of the user with the digital product).

A completely different threat to democracy develops when AI gets into the wrong hands. The intelligence services of some autocratic states use AI-supported spyware to monitor individual human rights activists and journalists, as well as for intelligence activities in democratic states, for example by hacking politicians’ cellphones. It is particularly controversial that the software for such intrusions is partly developed by companies based in democratically organized states. On the other hand, this might make it possible for lawmakers to regulate the export of such software more effectively.

Regarding regulation: Another touchpoint between AI and democracy is regulatory intervention, such as the European Union’s General Data Protection Regulation (GDPR) or the Digital Services Act. With such laws, the European Union aims to achieve a greater balance of power between private enterprises and citizens. Maybe even more important than the actual success of such political actions is the signal they send, namely that politicians are increasingly attempting to regulate the digital space, and that we, as a free and democratic society, are in the process of developing an idea of how that can be achieved.

No more defensive responses!

Until now, the response of democracies to the digital world has been largely defensive, as if responding to a threat. We are convinced, however, that democracies must leave this defensive stance behind and try to ‘own’ the digital space. If they do not, democracy’s slow, regulation-based interventions – which always lag behind technological developments – will leave the playing field to the large companies, whose primary interest is not the common good or a functioning democracy, but rather as much profit as possible. At the same time, the development of the digital space in a democracy offers enormous potential for positive, constructive contributions.

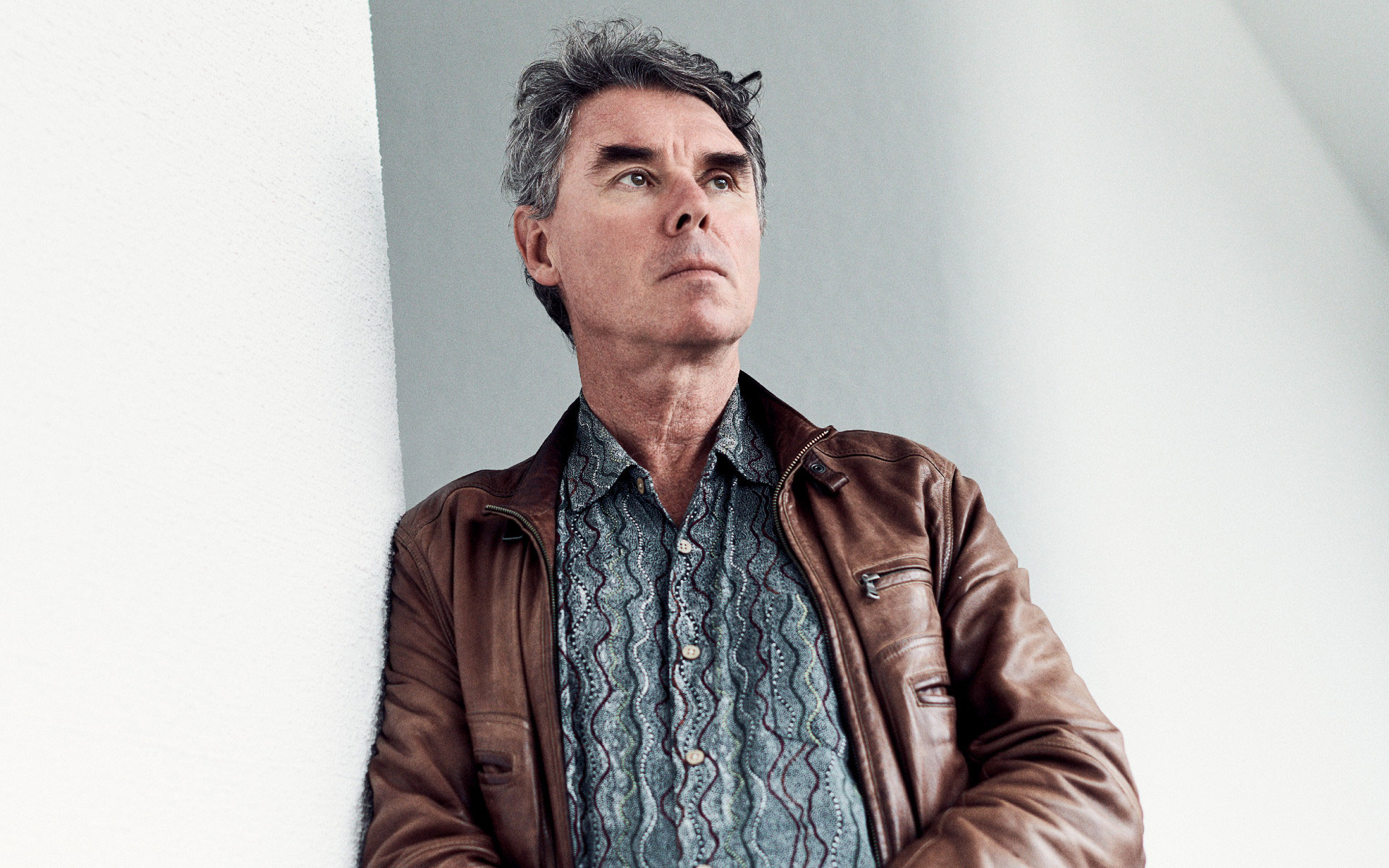

Larissa Höfling and Ilja Mirsky – advocating for a digitally competent democracy. © ELIA SCHMID / UNIVERSITY OF TÜBINGEN

A digitally anchored democracy

The lectures and discussions of this workshop provided a few starting points for further work in this field:

• Social networks, rolling news services, platforms for deliberation: There are many possibilities for political debate in the digital world – and they create an increasing amount of valuable data. Machine learning methods from natural language processing can be applied to assess the functioning and quality of political debates and to identify factors that influence them in a positive or negative way. One provision of the EU’s recent Digital Services Act should help considerably in facilitating this kind of research in the future, because it grants researchers access to the data that the large providers of digital services (e.g. Meta, formerly known as Facebook) collect from their users.

• At present, recommender systems, i.e., systems that suggest content to users, are primarily implemented by companies to maximize their profits. There are, however, alternative goals to maximizing profit. To name just one example, a large German newspaper publisher supports research on the use of recommender systems to diversify the news that is suggested to readers/users. This might help pierce echo chambers, initiate political discourse between those who disagree, and balance out the increasing polarization of opinion.

• Researchers in computational social science could link up with social movements such as Fridays for Future to better understand how messages spread in social networks. This knowledge could be applied to spread important messages more effectively – a kind of AI-based activism.
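To make the idea of a diversifying recommender from the list above more concrete, here is a minimal sketch. It is not the method used by the newspaper publisher mentioned there, which the workshop did not specify. The article titles, topic labels, relevance scores, and the `penalty` weight are all hypothetical and serve only to illustrate one simple approach: a greedy re-ranking that lowers the score of topics that have already been suggested.

```python
from collections import Counter


def diversify(articles, k, penalty=0.3):
    """Greedily pick k articles, penalizing topics that were already chosen.

    articles: list of (title, topic, relevance) tuples (illustrative data).
    penalty:  hypothetical weight subtracted from the relevance for each
              already-selected article on the same topic.
    """
    remaining = list(articles)
    chosen = []
    topic_counts = Counter()
    while remaining and len(chosen) < k:
        # Adjusted score = relevance minus a penalty for topic repetition.
        best = max(remaining, key=lambda a: a[2] - penalty * topic_counts[a[1]])
        chosen.append(best)
        topic_counts[best[1]] += 1
        remaining.remove(best)
    return chosen


# Invented example data: three highly "relevant" articles share one topic.
articles = [
    ("Tax reform passes", "economy", 0.95),
    ("Markets react to tax reform", "economy", 0.90),
    ("Budget debate continues", "economy", 0.85),
    ("Climate summit opens", "climate", 0.70),
    ("School funding vote", "education", 0.65),
]

# Ranking by relevance alone versus ranking with the diversity penalty.
top_by_relevance = sorted(articles, key=lambda a: a[2], reverse=True)[:3]
top_diversified = diversify(articles, k=3)

print([a[1] for a in top_by_relevance])
print([a[1] for a in top_diversified])
```

With this invented data, ranking by relevance alone suggests three articles on the same topic, while the penalized ranking spreads the suggestions across three topics. How strongly to penalize repetition is a design choice that depends on the goal the recommender is meant to serve.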

Our responsibility as researchers and citizens

Even with the ideas suggested above, it doesn’t take much imagination to foresee how they could also be used to weaken democratic cohesion. Algorithms are ethically indifferent, and anti-democratic forces also have an interest in spreading their messages (e.g., right-wing or populist propaganda) more effectively.

This is the most important finding of our workshop: We have a special responsibility as researchers and experts in AI, and also as citizens of democratic systems. We should continually reflect on technological developments through interdisciplinary dialogues and personal communication, and should get involved in decisions and discussions about when and where AI systems are applied, and for what purpose.

Most of all, we must develop a vision of a digitally competent democracy and involve as many interested people as possible in the design and application of this vision. The question of how AI and democracy are going to develop in the long run has not yet been answered.

Larissa Höfling and Ilja Mirsky organized an interdisciplinary workshop for PhD students at the University of Tübingen on “Artificial Intelligence and Machine Learning Research and Democracy”.
